Hedgehog Release and Imminent Token Distribution

Hedgehogs! We got hedgehogs everywhere! We give you a deep dive into recent development and what has been happening at Unigrid. What progress has been made and what's the next direction?

It's about time!

After many months of planning and development, we are finally ready to move on to the next stage in the development of Unigrid.

Our next generation network that we have lovingly dubbed Hedgehog is now imminent for a first release. This first version will be released with support for gridnode sporks and allow us to mint tokens and control settings and properties on the network. It will also test the peer-to-peer functionality, server and client components, REST capabilities and all the other implemented features.

In this somewhat drawn-out post I will take the opportunity to explain Hedgehog, what it is and what makes it different from anything else on the market. I will also tell you a little bit about what we are working on and what will happen next. We will also cover token distribution, how minting on the new network actually works and what we need from our early backers in order to finalize distribution.

While I tried to explain the topics in this post as simply as I could, I still want to apologize for some of the technical details. Unless you are a software engineer, some of it might be difficult to fully understand. However, I thought it was important to cover these topics and explain our process thus far.

What is Hedgehog?

The new Hedgehog network drives all the new Unigrid features and handles everything needed for network storage, VPN tunneling, CPU workloads and all the other features planned for the network. The old blockchain and network are currently used for consensus and to verify payments and minting on the network. Eventually, the legacy daemon and its network will be completely replaced by Hedgehog.

The first public version of Hedgehog will handle the new P2P network and all the logic for gridnode sporks. To connect the new network and the legacy network together, a bridge has been created where the legacy daemon (unigridd) now requires Hedgehog for operation and can no longer run without it.

We are still finalizing the distribution, but once completed, Hedgehog will be available here. We will make the repository public as soon as all the mandatory license files and documentation have been completed.

What makes it special?

Not only is Hedgehog a new network, but in order to handle heavy workloads, maximum concurrency and huge amounts of data, we chose to do things differently and rely on two proven frameworks and technologies: Netty and QUIC.

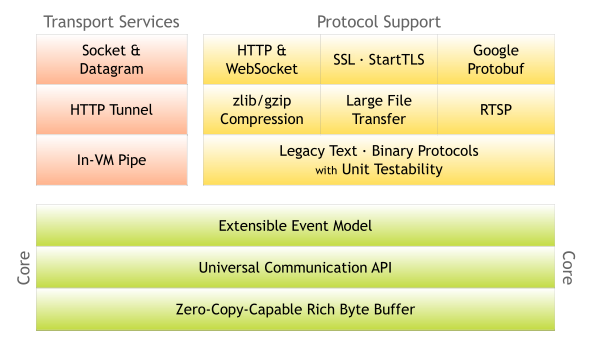

Netty

Netty is an enterprise-grade, event-driven network framework developed specifically to handle high throughput in an asynchronous manner. Because of its reliability and speed, the framework is used by many big companies such as Apple, Cisco, Facebook, Google, IBM, Netflix and Spotify, just to name a few.

If we look at some of the other blockchain networks on the market, they are, with a few exceptions, often derived from either Bitcoin or Ethereum. Because of this, their code bases are plagued by a myriad of different problems. Bitcoin suffers from network code that is badly structured and not designed to run in any concurrent way at all. Data structures are locked by semaphores in different threads, causing stalling and slowdowns. On the Ethereum side it's not much better, with sub-optimal data structure code and bottlenecks in how blocks are handled and stored to disk. Because of this, Ethereum-derived networks require big solid-state drives or massive amounts of disk cache to even be able to operate or sync up a full node.

With many enterprise-grade frameworks and libraries developed for Java over the years, using these proven frameworks for Hedgehog will help us mitigate and prevent similar mistakes - especially on the network level.

Pipeline Design

When implementing the network code and the use of Netty, we tried a couple of different approaches to see what works best. We ended up fully embracing Netty's philosophy of pipelining. Essentially, we have a pipeline on the backend that receives network data. On that pipeline live a number of handlers each having a specific task. When a handler receives data it has three options:

- Consume the data completely and tell the pipeline data processing of the message is completed.

- Handle the data partly and send the remaining portion of the data down the pipeline for further processing.

- Ignore the data and send it to the next handler in the pipeline.

Depending on the incoming message, each handler in the pipeline makes one of these choices. There are three different types of handlers:

- Encoders translate outgoing messages into a stream of bytes for transfer to the receiving end.

- Decoders translate an incoming stream of bytes into a local Java object.

- Message handlers take over from a decoder and handle an incoming message. This is where the actual logic for handling decisions based on incoming messages ends up.

This design makes it very easy to add support for additional functionality and message types. When a server or client creates a communication channel we simply create the message pipeline in one go.
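To make the three handler choices concrete, here is a minimal, self-contained Java sketch of such a pipeline. It deliberately does not use Netty's API: the `Handler` interface, the handler names and the `PING` and `HDR|` message formats are all hypothetical and only model the consume, partly handle and pass along behavior described above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

// Simplified model of a handler pipeline. This is NOT Netty's actual API.
final class PipelineSketch {

    /** Returns the data to pass down the pipeline, or empty if the message was consumed. */
    interface Handler {
        Optional<String> handle(String data, List<String> log);
    }

    /** Consumes "PING" messages completely; ignores everything else. */
    static final Handler PING_HANDLER = (data, log) -> {
        if (data.equals("PING")) {
            log.add("ping: consumed");
            return Optional.empty();      // option 1: consume completely
        }
        return Optional.of(data);         // option 3: ignore, pass along unchanged
    };

    /** Handles a hypothetical "HDR|" prefix and forwards the remaining data. */
    static final Handler HEADER_HANDLER = (data, log) -> {
        if (data.startsWith("HDR|")) {
            log.add("header: stripped");
            return Optional.of(data.substring(4)); // option 2: handle partly
        }
        return Optional.of(data);
    };

    /** Last handler in the pipeline: consumes whatever reaches it. */
    static final Handler PAYLOAD_HANDLER = (data, log) -> {
        log.add("payload: " + data);
        return Optional.empty();
    };

    /** The whole pipeline is created in one go, in processing order. */
    static final List<Handler> DEFAULT_PIPELINE =
            Arrays.asList(PING_HANDLER, HEADER_HANDLER, PAYLOAD_HANDLER);

    /** Sends a message through the handlers in order until one of them consumes it. */
    static List<String> run(String message, List<Handler> pipeline) {
        List<String> log = new ArrayList<>();
        Optional<String> current = Optional.of(message);
        for (Handler handler : pipeline) {
            if (!current.isPresent()) {
                break; // an earlier handler consumed the message
            }
            current = handler.handle(current.get(), log);
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run("PING", DEFAULT_PIPELINE));
        System.out.println(run("HDR|hello", DEFAULT_PIPELINE));
    }
}
```

In this model, adding support for a new message type means writing one more handler and adding it to the list, which is the property the pipeline design is chosen for.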

QUIC

The new P2P network of Hedgehog utilizes QUIC (Quick UDP Internet Connections) rather than ordinary TCP and makes Unigrid and Hedgehog one of the first blockchain networks to utilize this protocol. It gives us several advantages, such as packet-level encryption, greatly reduced latency on connections and reconnections, reduced transmission overhead, forward error correction and link migration. There are many different implementations of QUIC to choose from and we have chosen an implementation available for Netty (based on quiche from Cloudflare) - a code base that has been under development since December 2020.

Packet Level Encryption

Rather than stream-level encryption as seen with TCP+TLS, QUIC employs packet-level encryption. This means that transmitted packets are encrypted individually with more of the communication being encrypted, leading to increased security.

Forward Error Correction

While ordinary TCP does have error correction, that error correction is built around a checksum and the use of retransmission and acknowledgement segments in order to detect when a sent transmission is corrupted. Whenever a segment is correctly transmitted, the receiver has to acknowledge receipt to allow the sender to move forward. A sequence number in the TCP header is used to detect out-of-order transmissions. Whenever an error is detected due to the checksum not verifying or due to a missing transfer acknowledgement, the segment in question is retransmitted by the sender. Exactly how this mechanism works is explained very well on Wikipedia.

This results in the TCP protocol being extremely safe with a guarantee of consistent data transfers. This, however, comes at the expense of reduced speed and increased latency.

QUIC does error correction in a completely different way - by using forward error correction and parity information in the transmitted stream itself. This means that under most conditions, the protocol can verify and repair the incoming data using parity information, removing the need for retransmissions. QUIC also allows us to completely remove the error correction. This is especially useful when the received data is a stream of data or data structure where error verification already is an integral part of that data. This is for example true in the case of blocks or data signed with a signature. This makes it very convenient together with networks such as Hedgehog.
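The idea of repairing data from parity information can be illustrated with the simplest possible scheme: one XOR parity packet per group of equal-length packets. The sketch below is a generic illustration of forward error correction and makes no claim about how any QUIC implementation encodes parity on the wire; the class and method names are made up for the example.

```java
import java.nio.charset.StandardCharsets;

// Generic single-parity XOR scheme to illustrate forward error correction.
// Illustration only: this is not the wire format of any QUIC implementation.
final class XorParitySketch {

    /** XORs equal-length packets together; null entries (lost packets) are skipped. */
    static byte[] xorAll(byte[][] packets, int length) {
        byte[] out = new byte[length];
        for (byte[] packet : packets) {
            if (packet == null) {
                continue;
            }
            for (int i = 0; i < length; i++) {
                out[i] ^= packet[i];
            }
        }
        return out;
    }

    /** Rebuilds the single missing packet from the surviving packets and the parity packet. */
    static byte[] recover(byte[][] received, byte[] parity) {
        byte[] missing = xorAll(received, parity.length);
        for (int i = 0; i < parity.length; i++) {
            missing[i] ^= parity[i];
        }
        return missing;
    }

    public static void main(String[] args) {
        byte[][] sent = {
            "AAAA".getBytes(StandardCharsets.US_ASCII),
            "BBBB".getBytes(StandardCharsets.US_ASCII),
            "CCCC".getBytes(StandardCharsets.US_ASCII)
        };
        byte[] parity = xorAll(sent, 4);                 // sent along with the data
        byte[][] received = { sent[0], null, sent[2] };  // the second packet was lost
        byte[] repaired = recover(received, parity);
        System.out.println(new String(repaired, StandardCharsets.US_ASCII)); // prints BBBB
    }
}
```

A single parity packet can only repair one loss per group. Real schemes trade more parity data for the ability to repair more losses, but the principle of fixing data without a retransmission is the same.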

Reduced Connection Latency & Transmission Overhead

Because the protocol is based on UDP and UDP itself is extremely simplistic, QUIC connections can get away with much less overhead. One reason for this lower overhead is the aforementioned error correction of TCP. Another is that the handshake and encryption can be built straight into the protocol rather than being an afterthought, as is the case with TCP+TLS.

Rather than requiring a handshake, because QUIC is based on UDP, repeat connections on QUIC are instant and require no tunnel or actual connection to be set up between the sender and receiver prior to transmission. This makes the protocol absolutely perfect for peer-to-peer networks such as Hedgehog, as they require a lot of network scanning, node connections and reconnections.

Imagine a situation where you have a list of 30 peers that you are not connected to, but your local node knows about them and knows exactly how to reach them. You need to query them in order to fetch some fairly simple information, where the average query time for that information is 80ms. Under traditional TCP+TLS, assuming roughly 200ms to set up each connection, this would take:

(200ms + 80ms) × 30 = 8400ms = 8.4 seconds

Under QUIC, with zero reconnection times, we would instead end up with:

(0ms + 80ms) × 30 = 2400ms = 2.4 seconds

Not only does QUIC cut down on reconnection times - it also cuts down on overhead and latency, as less superfluous traffic data needs to be sent between the receiver and sender. In fact, when Google deployed and changed to QUIC on one of their CDN networks back in 2018, they instantly saw an increase in throughput of about 40%. If we take this into account and make a very rough estimate, assuming the same 40% gain applies to the time it takes to send these transmissions, we get the following result:

Taking average throughput increase into account:

(0ms + 80ms) × 30 / 1.4 ≈ 1714ms ≈ 1.7 seconds

This is nearly five times faster than the original time! While the above example shows an ideal situation, it also clearly demonstrates the possible advantages of using QUIC as compared to traditional TCP+TLS.
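The arithmetic in this example can be reproduced with a few lines of Java. This is only a sketch of the back-of-the-envelope estimate: it assumes the peers are queried one after another, and the 200ms setup time, the 80ms query time and the 1.4 throughput factor are the illustrative figures from the example, not measurements.

```java
// Reproduces the back-of-the-envelope estimate from the example.
// All figures are the illustrative numbers from the text, not measurements.
final class LatencyEstimate {

    /** Total time in ms to query the peers sequentially, scaled by a throughput gain factor. */
    static double totalMs(double setupMs, double queryMs, int peers, double throughputGain) {
        return (setupMs + queryMs) * peers / throughputGain;
    }

    public static void main(String[] args) {
        double tcpTls = totalMs(200, 80, 30, 1.0);        // connection setup on every query
        double quic = totalMs(0, 80, 30, 1.0);            // zero reconnection time
        double quicWithGain = totalMs(0, 80, 30, 1.4);    // plus the assumed 40% throughput gain

        System.out.printf("TCP+TLS:        %.0f ms%n", tcpTls);
        System.out.printf("QUIC:           %.0f ms%n", quic);
        System.out.printf("QUIC with gain: %.0f ms%n", quicWithGain);
        System.out.printf("Speedup:        %.1fx%n", tcpTls / quicWithGain);
    }
}
```

Changing the inputs shows how sensitive the result is to the setup time: the longer the connection setup relative to the query itself, the more there is to gain from skipping it.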

All these advantages make QUIC ideal for a peer-to-peer network such as Hedgehog. Because of the way that peer-to-peer networks work (with constant peer discovery, network scanning and peer queries), it cuts down on overhead and latency even more than in traditional situations.

Current Development

Other than finalizing an initial release of Hedgehog, different parts of the team have been working on a number of implementations and improvements and I will quickly try to cover them.

Janus Wallet Improvements

Ever since the release of the next generation wallet we have continuously been improving the functionality and code base. To date, there have been three separate installer updates and over ten separate updates of the wallet itself with the version number now up to 1.0.11. As an end user, you might not even have noticed the updates, as this is automated by the wallet.

Amazon S3 Endpoint

If we look at some of the other projects on the market and in particular other peer-to-peer and blockchain networks, onboarding is extremely painful. If you are an application developer, moving your infrastructure and code bases over to using these networks can be very difficult and sometimes even impossible.

This is a mistake that we don't want to repeat.

To make onboarding simple, we are currently working on an Amazon S3 endpoint that will connect to the block storage of the Hedgehog network. So while the network itself is using blocks and shard groups to store the data, applications and users are blissfully unaware of this fact in the exposed API. Not only is our API similar, it is identical to the offering of Amazon.

Compared to the United States, the copyright laws in Europe are very different, with the European Commission convinced that, above all, open competition is important on the open marketplace. In fact, with this in mind, the EU's European Court of Justice ruled APIs to be non-copyrightable back in 2012. Of course, as a European business we adhere to these regulations. This allows us to offer an identical API as an access layer to the Unigrid network and cut down onboarding times to zero.

One of our developers, Torekhan, is currently working on the implementation, using S3Mock to verify the behavior and integrating behavioral tests in our test suite.

Easy Dockerized Janus Gridnode Deployment

A few weeks ago, Evan spent some time working on a dockerized gridnode deployment. This docker image allows you to deploy a gridnode on any server with docker installed. There is also an installer script you can use that is tailor-made for Debian and Ubuntu. This script installs everything needed to get going and makes deployment of gridnodes a breeze.

This new dockerized deployment is documented on docs.unigrid.org, under the section "How to run a gridnode".

One-click Gridnode Deployment from Janus

Building on top of the docker images mentioned earlier, Tim is currently working on a more approachable gridnode deployment frontend in the Janus wallet. Essentially, this integration allows for one-click deployments of gridnodes directly from within the wallet itself. When initiated, all you have to do is supply the hostname and login credentials for the server where you want to deploy your node and the wallet will handle the rest. As a teaser, here are some screenshots of the MVP for that functionality;

From this new gridnode panel you can monitor not only the status of your nodes, but also any running deployments and their progress. On each deploying node you can click and monitor the output of the deployment and look for any potential problems.

Mass-deployment of nodes needs to be easy and we believe that this solution provides a pretty elegant way to deploy and monitor many nodes at once.

Desktop Network Storage

Janko and Boris have been working on network storage via desktop. This is achieved by exposing a mounted desktop drive that connects directly to the Unigrid network. In many ways it is similar to services like OneDrive. On the network level, a Unigrid address is automatically assigned to the mounted drive, allowing you to store data on that drive. The network then deducts small amounts of Unigrid from that address as payment for that storage. The drive and the data stored on it will also be accessible via a bucket in the S3 endpoint.

Unigrid vs traditional Cloud services

Apart from the big offerings such as AWS, Azure and Google Cloud, there are many other cloud alternatives and products on the market to choose from. Some of the smaller platforms include RedHat, Oracle Cloud Infrastructure, Digital Ocean, Linode and Cloudways.

Many of these platforms are great. However, they all suffer from three critical faults:

- Inflated price due to expensive upkeep, monopolizing practices and customers having to pay extra for functionality that should be an integral part of the cloud solution.

- No fault-tolerance or redundancy built into the service. If the servers hosting your data go offline for any reason, customers will temporarily (or even permanently) lose access to their data. If redundancy is available, it always costs more or, even worse, the customer has to solve it in their own deployment.

- No storage encryption. If available, it again often costs extra.

Unigrid is working to address these issues with the Hedgehog network:

- Pricing on the network is kept low by having an open network where any provider of servers can join and provide their hardware to the Unigrid network. The open competition is intended to keep the price as low as possible for the customer storing and deploying data and services.

- To solve the issue of bad redundancy and fault-tolerance, storage blocks and compute blocks are sharded (split into pieces) and propagated throughout gridnodes on the network. When a deployed file or service is referenced by somebody, a fingerprint is sent - that fingerprint tells the network how to query and locate the shards needed to assemble the data.

- To solve the problem of costly storage encryption, encrypting the data is the default policy with any block data stored on the network.

How is data stored and assembled?

Let's imagine Bob wants to store some data on the Unigrid network. Bob is either a user that is running Hedgehog, or a gridnode running Hedgehog. Bob sends some data to the network and receives a fingerprint from the gridnode or gridnodes he is communicating with that looks similar to:

67x6AzsJ7RLmd5Y9yWbjzYgSoJNH2JrM1FoN38CNAcad

While there is no central location or repository of these fingerprints, individual nodes on the network maintain information about them and what data they are storing. When Bob requests the data, the network is queried and he receives information on how to fetch it (who to ask for the data). Because the data is split into shards, Bob then has to query the network and simultaneously download all the individual pieces.
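The store and fetch flow described above can be sketched in a few lines of Java. Note that the post does not specify how Hedgehog derives its fingerprints or how large a shard is, so everything here is an assumption for illustration: a hex-encoded SHA-256 hash stands in for the fingerprint, the shard size is arbitrary, and the class and method names are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustration of sharding and fingerprinting.
// ASSUMPTIONS: SHA-256 as fingerprint and fixed-size shards; Hedgehog's real format is not documented here.
final class ShardSketch {

    /** Splits data into shards of at most shardSize bytes. */
    static List<byte[]> split(byte[] data, int shardSize) {
        List<byte[]> shards = new ArrayList<>();
        for (int offset = 0; offset < data.length; offset += shardSize) {
            int end = Math.min(data.length, offset + shardSize);
            shards.add(Arrays.copyOfRange(data, offset, end));
        }
        return shards;
    }

    /** Stand-in fingerprint: the hex-encoded SHA-256 hash of the data. */
    static String fingerprint(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(data);
            StringBuilder hex = new StringBuilder();
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 should be available on every Java platform", e);
        }
    }

    /** Reassembles the original data from shards given in their original order. */
    static byte[] assemble(List<byte[]> shards) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (byte[] shard : shards) {
            out.write(shard, 0, shard.length);
        }
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] data = "hello unigrid".getBytes(java.nio.charset.StandardCharsets.UTF_8);
        List<byte[]> shards = split(data, 4);
        System.out.println("shards: " + shards.size());
        System.out.println("fingerprint: " + fingerprint(data));
        System.out.println("intact after reassembly: "
                + fingerprint(assemble(shards)).equals(fingerprint(data)));
    }
}
```

Because the fingerprint in this sketch is derived from the content, Bob can verify the reassembled data against it no matter which nodes the individual shards came from.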

This is where one of the really big advantages of a segmented network storing data in this fashion comes into play. Under ideal network conditions, a direct and fast link connected to a speedy server on the other end is often faster. However, the Internet seldom offers us ideal conditions. In fact, during peak hours, certain routes come under heavy load and have to be heavily traffic shaped in order to even maintain any kind of responsiveness. This leads to slower downloads and generally bad performance. Imagine the following scenario where some data is stored in a centralized data center at a cloud provider:

Traditionally, data is stored in a data center where there are a few incoming routes. Along the path, there can be several routers that packets have to go through. The more users that are connected to the same path as Bob, the more overloaded and congested it gets, leading to Bob having to wait longer for his data. This creates an unbalanced situation where popular parts of the Internet get more traffic and certain routes and routers are considerably more loaded than others.

Now let us instead look at Hedgehog and how data is accessed via our network;

A shard group is a collection of gridnodes (servers) that are near each other in terms of latency. Each shard group hosts a small mutable blockchain that stores shards of data. The Unigrid network constantly rebalances and forms these shard groups. A shard of data can be stored in one or multiple shard groups depending on the given circumstances. At first glance, this might look even more congested. However, this is not the case. Even though some routers may still be loaded to the point of being overloaded, when Bob fetches data, that data is requested from multiple shard groups at the same time instead of downloading a single stream. Furthermore, when data retrieval starts, Bob checks shard group latencies in relation to his location and downloads the data from the shard groups that are most appropriate to be used by him. In some situations this can speed up performance many times over - especially during heavily congested situations.

On a traditional cloud service, if a route or data center goes offline, the data or service can become completely inaccessible for Bob. With the Unigrid network he can download shards from routes that are online. If a route, or even a few routes, go offline, it does not threaten the integrity or availability of the data. This is because the data is sharded and there are always several sources hosting that data.
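The latency check Bob performs before downloading can be sketched as a simple selection step. This is a minimal illustration under stated assumptions, not the selection logic Hedgehog actually uses: the `ShardGroup` type, the group names and the latency figures are hypothetical, and a real client would also have to handle groups that stop responding.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Illustration only: picks the shard groups with the lowest measured latency.
// The ShardGroup type and the numbers used are hypothetical.
final class ShardGroupSelection {

    static final class ShardGroup {
        final String name;
        final double latencyMs;

        ShardGroup(String name, double latencyMs) {
            this.name = name;
            this.latencyMs = latencyMs;
        }
    }

    /** Returns the names of the 'count' shard groups with the lowest latency, fastest first. */
    static List<String> pickFastest(List<ShardGroup> groups, int count) {
        return groups.stream()
                .sorted((a, b) -> Double.compare(a.latencyMs, b.latencyMs))
                .limit(count)
                .map(group -> group.name)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<ShardGroup> hostingGroups = Arrays.asList(
                new ShardGroup("group-a", 120.0),
                new ShardGroup("group-b", 35.0),
                new ShardGroup("group-c", 60.0),
                new ShardGroup("group-d", 250.0));

        // Bob would then request shards from these groups in parallel.
        System.out.println(pickFastest(hostingGroups, 2));
    }
}
```

Since the choice is made per client and per request, two users in different locations can end up fetching the same data over entirely different routes.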

Unigrid vs traditional Web3 Blockchains

Compared to most other blockchain networks on the market, Unigrid, and Hedgehog in particular, is not a traditional blockchain network by any means. The network does not have any traditional central ledger - this would be inefficient and waste way too much space. We actually have an old article covering how the concept differs that is still an interesting read.

Instead of storing data in a few or just a single blockchain data structure, the network stores the shards in individual shard groups. These shard groups act as tiny segmented networks storing exactly one small blockchain.

Because a shard is assigned to, at most, a few shard groups at once, calling and using the network involves no sync times like with traditional blockchain networks. The storage of data is dependent on the shard groups and gridnodes already on the network rather than all communicating nodes.

Trademark Registration

The UNIGRID trademark was recently registered by us in Sweden. The trademark is registered in the classes 9 and 42, covering cryptocurrency transactions and blockchain as a service. Information about the trademark can be found in the Swedish Trademark Database under application number 2022/05822.

Token Distribution

After many changes, such as tokenomics and the decision to make the new tokens dependent on the new network code, it has taken some time to get to this point. However, we are now finally ready to release Hedgehog and start token distribution to our backers.

As a backer you need to log in to our sale portal and report a wallet address. For a quick tutorial on how to run the wallet, generate an address and report it to the sale portal, please take a look at this previous blog post.

When we mint, we will issue minting blocks into a gridnode spork on the Hedgehog network that we call the mint storage. The legacy network uses this information on incoming blocks and mints Unigrid at the block number and to the address specified.
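Conceptually, the mint storage can be thought of as a lookup from block number to the addresses that should receive newly minted Unigrid at that block. The sketch below only models that idea. The actual spork format and the legacy daemon's lookup are not described in this post, so the `MintEntry` type, the amounts and the placeholder addresses are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Conceptual model of a mint storage: block number -> mints due at that block.
// ASSUMPTION: the real spork layout is not documented here; all names are hypothetical.
final class MintStorageSketch {

    static final class MintEntry {
        final String address;
        final long amount;

        MintEntry(String address, long amount) {
            this.address = address;
            this.amount = amount;
        }
    }

    private final Map<Long, List<MintEntry>> mintsByBlock = new HashMap<>();

    /** Records that 'amount' should be minted to 'address' at the given block number. */
    void schedule(long blockNumber, String address, long amount) {
        mintsByBlock.computeIfAbsent(blockNumber, key -> new ArrayList<>())
                .add(new MintEntry(address, amount));
    }

    /** What the legacy side would look up when a block with this number arrives. */
    List<MintEntry> mintsFor(long blockNumber) {
        return mintsByBlock.getOrDefault(blockNumber, Collections.<MintEntry>emptyList());
    }

    public static void main(String[] args) {
        MintStorageSketch storage = new MintStorageSketch();
        storage.schedule(1000L, "PLACEHOLDER_ADDRESS_1", 500L);
        storage.schedule(1000L, "PLACEHOLDER_ADDRESS_2", 250L);

        for (MintEntry entry : storage.mintsFor(1000L)) {
            System.out.println("mint " + entry.amount + " to " + entry.address);
        }
        System.out.println("mints at block 1001: " + storage.mintsFor(1001L).size());
    }
}
```

This also shows why backers need to report a wallet address first: without an address there is nothing to enter into the mint storage for them.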

If you need any help, please send an email with questions or join the Telegram and ask for support. Contact information is available on our website.

Getting Involved

We are always looking for people to get involved in development. If you are a hobby developer that wants to sink your teeth into network development and development of decentralized cloud services - send us a ping. You can get in touch with us via email or our official social network. Links are available on our website. Not only is it a lot of fun to work on these code bases, it's also a way for us to find new talent.

Any contributors that catch our attention are a potential hire and we are always looking for good developers looking for a challenge.

Closing Words

This is without a doubt the longest blog post I have ever written on decentralized-internet. Sorry about that. I hope it gives you some insight into what we have been doing for the last couple of months and in what direction development of the network is headed. We still have a lot of work to do, but thanks to your support and the support of all our backers we are making progress and constantly breaking new ground.

I also want to thank everybody for sticking with us. This blog now has nearly 6 000 subscribers and is growing daily. For a nerdy blog about decentralization and cloud computing - that's not bad at all.

Let's keep cracking.